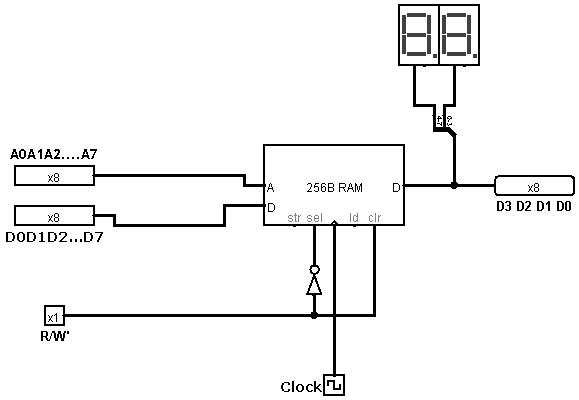

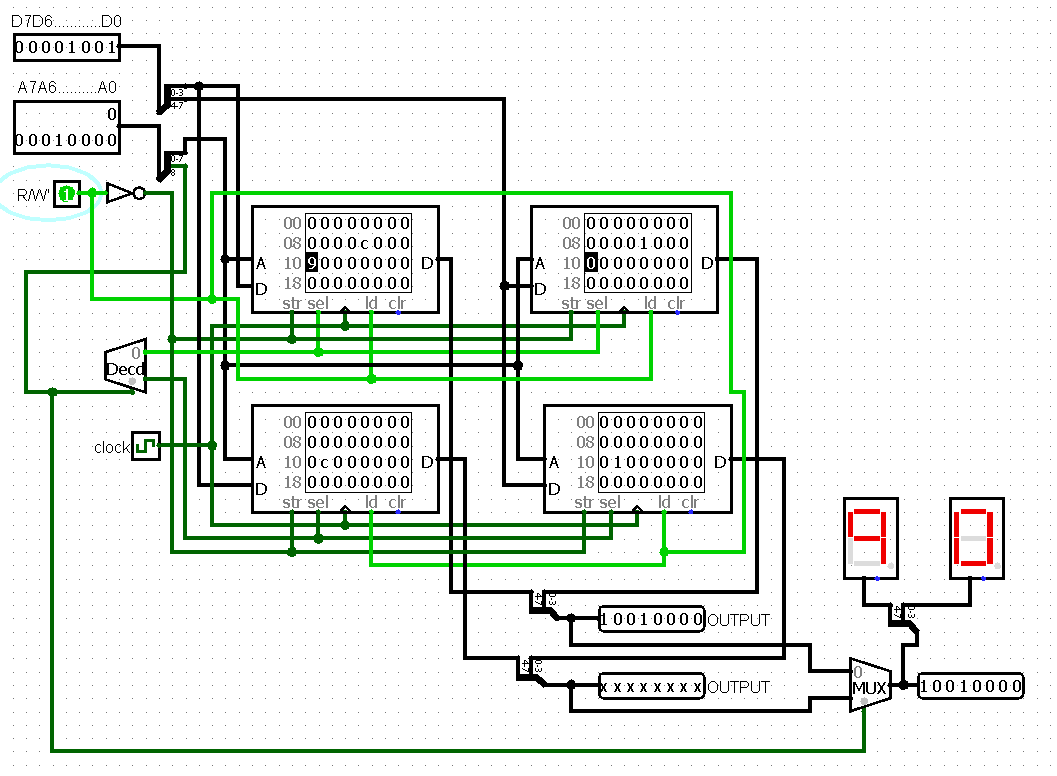

In computer systems, memory generally refers to Random Access Memory (RAM), which temporarily stores the data that the system is actively using or processing. RAM is organized as a set of locations, each holding an n-bit word, where "n" is the bit width of the memory's operations (e.g., 8-bit, 16-bit, 32-bit, etc.). Every location is identified by a unique memory address, and the R/W (Read/Write) control signal determines whether data is read from or written to the addressed location. This ability to access any location directly (hence "random access") is what distinguishes RAM from sequential-access storage such as magnetic tape, where data must be accessed in a fixed order.
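
The addressing and R/W behavior described above can be sketched as a small software model. This is only an illustration, not a description of real hardware: the class name `SimpleRAM`, its `access` method, and the parameters `address_bits` and `word_bits` are hypothetical names chosen for this example.

```python
class SimpleRAM:
    """Toy model of a RAM (hypothetical, for illustration only).

    It has 2**address_bits locations, each holding one word_bits-wide word.
    """

    def __init__(self, address_bits: int, word_bits: int) -> None:
        self.word_bits = word_bits
        self.size = 1 << address_bits   # number of addressable locations
        self.cells = [0] * self.size    # every location starts at 0

    def access(self, address: int, write: bool, data: int = 0) -> int:
        """Perform one memory operation.

        `write` plays the role of the R/W control signal:
        True stores `data` at `address`, False reads the stored word.
        The word held at `address` after the operation is returned.
        """
        if not 0 <= address < self.size:
            raise IndexError(f"address {address} is out of range")
        if write:
            if not 0 <= data < (1 << self.word_bits):
                raise ValueError(f"data does not fit in {self.word_bits} bits")
            self.cells[address] = data
        return self.cells[address]


# A 16-location, 8-bit-wide memory.
ram = SimpleRAM(address_bits=4, word_bits=8)

# Locations can be written and read in any order ("random access").
ram.access(0xF, write=True, data=0x01)
ram.access(0x3, write=True, data=0xAB)
value = ram.access(0x3, write=False)
print(hex(value))  # prints 0xab
```

In this sketch, reading address `0x3` does not require stepping through addresses `0x0` to `0x2` first, which is the property the term "random access" refers to.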

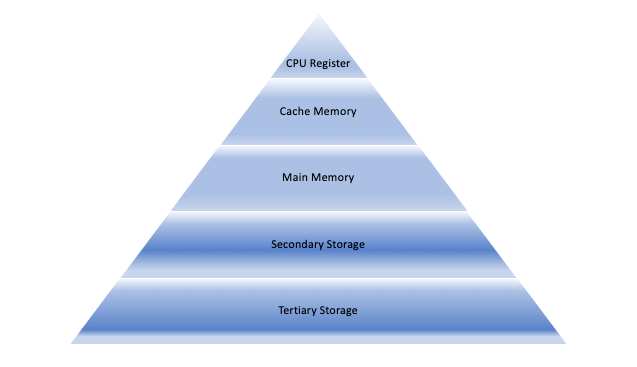

Memory hierarchy is a concept in computer architecture that organizes memory storage by access speed, capacity, and cost. The idea is to keep the most frequently used data in fast memory while holding less frequently used information in larger, slower storage. A hierarchy typically has multiple levels, each offering a different trade-off between response time, capacity, and cost. The levels are ordered from the fastest, smallest, and most expensive memory at the top to the slowest, largest, and cheapest memory at the bottom.
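
As a rough sketch of how a lookup moves down such a hierarchy, the example below searches the levels from top to bottom and adds up the time spent at each one. The level names and latency figures are assumptions invented for illustration, not measurements of any real system, and `Level` and `lookup` are hypothetical helper names.

```python
from dataclasses import dataclass, field


@dataclass
class Level:
    """One level of a toy memory hierarchy (hypothetical model)."""
    name: str
    latency_ns: float                      # assumed access time, illustrative only
    contents: set = field(default_factory=set)  # addresses currently held here


def lookup(levels: list, address: int) -> tuple:
    """Search the levels from fastest to slowest.

    Returns (name of the level where the address was found,
             total time spent, counting every level that was checked).
    """
    total_ns = 0.0
    for level in levels:
        total_ns += level.latency_ns
        if address in level.contents:
            return level.name, total_ns
    raise KeyError(f"address {address} is not stored at any level")


# Ordered from the top (fast, small) to the bottom (slow, large).
hierarchy = [
    Level("cache", 1.0, {1, 2}),
    Level("main memory", 100.0, {1, 2, 3, 4}),
    Level("disk", 1_000_000.0, set(range(16))),
]

print(lookup(hierarchy, 2))  # ('cache', 1.0)
print(lookup(hierarchy, 4))  # ('main memory', 101.0)
print(lookup(hierarchy, 9))  # ('disk', 1000101.0)
```

With these made-up numbers, an address found in the top level costs far less than one found only at the bottom, which is why a hierarchy tries to keep frequently used data near the top.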